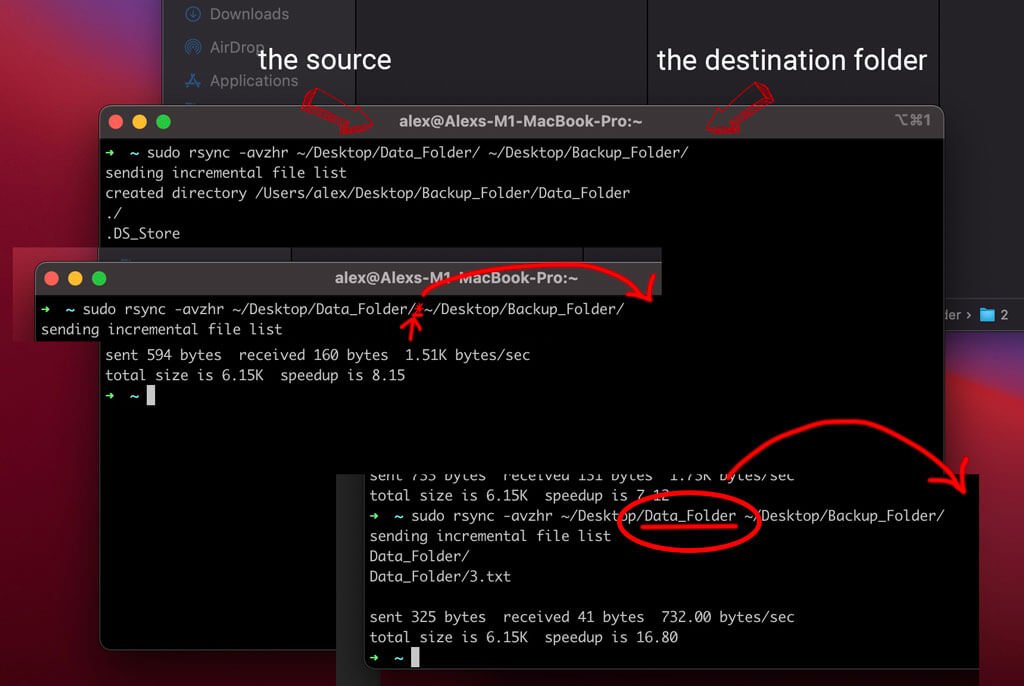

Sync Data_Folder and put the folder into Backup_Folder

rsync -avzhr ~/Data/Data_Folder username@backup_server:~/Backup_Folder/

Sync everything inside Data_Folder into DataFolder on the backup server

rsync -avzhr ~/Data/Data_Folder/ username@backup_server:~/Backup_Folder/DataFolder/

| Option | Description |
| --- | --- |
| -a | archive mode; copies files recursively and preserves symbolic links, file permissions, user and group ownership, and timestamps |
| -v | verbose output |
| -z | compress file data during the transfer |
| -h | output numbers in a human-readable format |
| -r | copy directories recursively; on its own it does not preserve timestamps or permissions (already implied by -a) |
| --dry-run | show what would be transferred without making any changes |
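The difference between the two rsync commands above comes down to the trailing slash on the source. The following is a minimal local sketch of that behaviour, assuming rsync is installed; the temporary directory and the names `no_slash` and `with_slash` are made up for illustration and are not part of the original setup.

```shell
#!/bin/sh
# Local sketch: compare rsync with and without a trailing slash on the source.
# Everything lives in a throwaway temp directory (hypothetical names).
work=$(mktemp -d)
mkdir -p "$work/Data_Folder" "$work/no_slash" "$work/with_slash"
echo "hello" > "$work/Data_Folder/file.txt"

if command -v rsync >/dev/null 2>&1; then
    # No trailing slash: the directory itself is created inside the destination.
    rsync -a "$work/Data_Folder" "$work/no_slash/"
    # Trailing slash: only the contents are copied into the destination.
    rsync -a "$work/Data_Folder/" "$work/with_slash/"
    # List the resulting files to compare the two layouts.
    find "$work/no_slash" "$work/with_slash" -type f
else
    echo "rsync is not installed; skipping" >&2
fi
```

The first copy ends up at `no_slash/Data_Folder/file.txt`, the second at `with_slash/file.txt`.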

--exclude 'file.txt'

or

--exclude={'*.lrdata','dir2','dir3'}
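The `--exclude={...}` form is expanded by Bash, not by rsync: the shell turns the list into one `--exclude=` argument per item before rsync runs. A small sketch of what rsync actually receives, assuming bash is available on the machine:

```shell
#!/bin/sh
# Brace expansion is done by Bash before rsync starts, so bash is invoked
# explicitly here; plain sh (for example dash) would pass the braces through.
expanded=$(bash -c "printf '%s\n' --exclude={'*.lrdata','dir2','dir3'}")
echo "$expanded"

# A single item is not expanded; the braces reach rsync literally.
single=$(bash -c "printf '%s\n' --exclude={'dir2'}")
echo "$single"
```

This is why the brace form needs at least two items, and why a script run by a non-Bash shell may silently exclude nothing.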

Some Synology DiskStation folders to ignore

'#recycle','@eaDir'

-avzhr --exclude={'*.lrdata','#recycle','@eaDir','_SYNCAPP','.DS_Store','Thumbs.db','.picasa.ini','.TemporaryItems','.apdisk'}

node_modules

sudo rsync -avzhr --exclude={'*.lrdata','#recycle','@eaDir','_SYNCAPP','.DS_Store','Thumbs.db','.picasa.ini','.TemporaryItems','.apdisk'} /var/services/homes/alexcpl/mount/photo/source /volume1/photos/

Copy Directories on Linux

cp -R <source_folder> <destination_folder>

example

cp -R /etc /etc_backup

Copy Directory Content Recursively on Linux

cp -R <source_folder>/* <destination_folder>

Copy multiple directories with cp

cp -R <source_folder_1> <source_folder_2> ... <source_folder_n> <destination_folder>

example

cp -R /etc/* /home/* /backup_folder

Copying using rsync

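Make sure to include the “-r” option for “recursive” and the “-a” option for “archive” (otherwise symbolic links and other non-regular files will be skipped, and permissions and timestamps will not be preserved). Note that “-a” already implies “-r”.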

rsync -ar <source_folder> <destination_user>@<destination_host>:<path>

example

rsync -ar /etc user@server:/etc_backup

Similarly, you can copy the contents of the /etc directory rather than the directory itself by appending a wildcard character after the directory to be copied.

rsync -ar /etc/* user@server:/etc_backup/

Finally, if you want to include the current date in the backup path, you can use Bash command substitution.

rsync -ar /etc/* user@server:/etc_backup/etc_$(date "+%F")
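The `$(date "+%F")` part is evaluated by the local shell before rsync is started, so the remote side only ever sees the finished path. A short sketch of how the name is built; the `/etc_backup` prefix is reused from the example above purely for illustration:

```shell
#!/bin/sh
# %F is shorthand for %Y-%m-%d, so dated folder names sort chronologically.
stamp=$(date "+%F")
# Hypothetical destination path, assembled locally.
dest="/etc_backup/etc_${stamp}"
echo "$dest"
```

Running the backup twice on the same day reuses the same folder; adding a time component such as `%H-%M-%S` to the format would separate the runs.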

Copying using scp

scp -r <source_folder> <destination_user>@<destination_host>:<path>

example

scp -r /etc user@server:/etc_backup/

scp -r /etc user@server:/etc_backup/etc_$(date "+%F")

Backing up using tar and SSH to backup server

#!/bin/bash

tar -zcf /volume1/docker/pihole_$(date +"%Y-%m-%d_%H-%M-%S").tar.gz -C /volume1/docker pihole

rsync -a /volume1/docker/pihole_*.tar.gz username@server:/volume1/backups/docker_backups/

Sometimes the disk on a server is nearly full, so you cannot create a tar file locally and then copy it across the network. In that case, you can stream the archive directly to the backup server over SSH.

#!/bin/bash

tar -zcvf - source_folder | ssh user@server "cat > /volume1/backups/backup_date.tgz"

To extract a .tgz file, use the following command.

tar -xvzf /volume1/backups/backup_date.tgz

AFSO backup script

#!/bin/bash

rsync -avzhr --progress --exclude={'#recycle','AFSOSERVER01_backup.hbk','@eaDir','_SYNCAPP','.DS_Store','Thumbs.db','.picasa.ini','.TemporaryItems','.apdisk'} csqadmin@192.168.1.31:/volume1/administration/ /volume1/administration/
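The same long exclusion list is repeated in several commands above. One possible alternative, sketched here under the assumption that rsync is installed, is to keep the patterns in a file and pass it with rsync's `--exclude-from` option. The temporary directory and the `excludes.txt` name are hypothetical and not part of the original script.

```shell
#!/bin/sh
# Sketch: keep exclusion patterns in a file and pass it with --exclude-from.
# The temp directory and file names below are hypothetical.
work=$(mktemp -d)
mkdir -p "$work/src/@eaDir" "$work/dst"
echo "keep" > "$work/src/report.txt"
echo "skip" > "$work/src/.DS_Store"
echo "skip" > "$work/src/@eaDir/thumb.jpg"

# One pattern per line. Lines starting with '#' are treated as comments in
# an exclude file, so '#recycle' stays on the command line instead.
cat > "$work/excludes.txt" <<'EOF'
@eaDir
.DS_Store
Thumbs.db
EOF

if command -v rsync >/dev/null 2>&1; then
    rsync -a --exclude='#recycle' --exclude-from="$work/excludes.txt" \
        "$work/src/" "$work/dst/"
    # Only report.txt should be listed.
    find "$work/dst" -type f
else
    echo "rsync is not installed; skipping" >&2
fi
```

Keeping the list in one file means a new pattern only has to be added in one place.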